My optimized rig: nearly 2 MH/s at only 680 watts of power.

This fourth installment of our litecoin mining guide will focus on getting the most out of your hardware—finding the sweet spot between maximum performance and acceptable power usage (and noise/heat generation!).

The tweaks that I outline in this article are applicable whether you’re using Linux or Windows. If you’re using the hardware that was recommended in the first part of this guide (or very similar hardware), you should expect to see a performance increase of 10% or more in your litecoin mining hashrate, compared to the baseline cgminer settings that were given in our Linux and Windows setup guides.

In addition to increasing your mining speed, I’ll also show you how to set up a backup mining pool to automatically failover to in case your primary pool becomes unavailable. There is nothing worse than having your mining rig(s) sit idle because your pool went down!


Build a Litecoin Mining Rig, part 4: Optimization

I want to preface this guide with a strong recommendation that anyone following it have access to a Kill-A-Watt (or similar device). Many of the tweaks outlined in this article will change the amount of power that your mining computer uses, and without the ability to measure power consumption, you have no way of making an informed decision about whether each change actually helps your bottom line. Electricity isn’t free (for most of us, anyway), and a small increase in hashing performance that costs an extra 150 watts may actually end up costing you in the long run. Amazon sells Kill-A-Watts for ~$17, which is cheaper than I’ve seen them anywhere else, and the device will likely pay for itself many times over.

With that said, if you don’t have a kill-a-watt, but you do have the same hardware that I recommend in the first part of this mining guide, then you can be relatively confident that all of the tweaks below will result in positive monetary gains for you (at current litecoin prices and mining difficulty, anyway).

Overclock your GPU(s)

Overclocking your video cards will likely result in the largest overall mining gains, and it can be done right from within cgminer in most cases. Some cards are more overclockable than others (the MSI 7950 that I recommend is one of the best), but most should yield at least some gain by tweaking clock speeds upward.

Disclaimer: overclocking beyond factory default settings may cause system instability, harm your hardware, and/or invalidate your warranty. The author assumes no responsibility if you fry your computer.

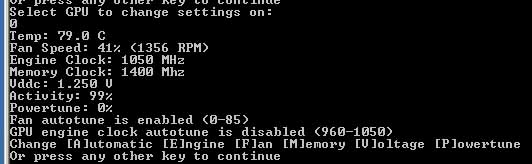

The best way to find your ideal clock speeds is to start cgminer, and press “G” while it is running to open the (G)PU menu. From there, press “C” to (C)hange settings (and then press “0” to select your first GPU if you have more than one).

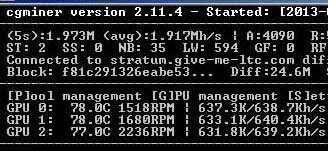

cgminer GPU settings: use the (E)ngine and (M)emory options to change your video card’s core and memory clock speeds, respectively.

You should be looking at a screen similar to the one shown above. From here, use the “E” and “M” keys to slowly push your GPU core and memory clock speeds upward. I recommend increments of about 25 MHz at a time, with a 1-2 minute pause after each change to observe the effect on hashrate and stability. Don’t forget to check your Kill-A-Watt for changes in power consumption, too. For best results, keep your memory clocked about a third higher than your core speed.

You’ll know you’ve gone too high when one of the following occurs:

- Your GPU (or computer) simply crashes or hangs. You may require a system reboot at this point (a cold boot is best).

- You start to see hardware errors (watch the “HW” column next to the GPU that you’re overclocking—if it’s higher than zero, you’re probably too high).

- Your last clock speed increment brought only very small (or no) hashrate gains compared to the previous increases.
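The step-and-observe routine above can be sketched in Python if you keep notes as you go. This is a minimal sketch, assuming you write down one reading (clock speeds, the hashrate cgminer shows, the value in its “HW” column, and whether anything hung) after each ~25 MHz step; the `Reading` class, the helper names, and the 5 kH/s “too small a gain” threshold are hypothetical choices for illustration, not anything cgminer provides.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Reading:
    """Hypothetical record of what you observe 1-2 minutes after one clock change."""
    core_mhz: int
    mem_mhz: int
    khs: float              # hashrate cgminer reports for this GPU
    hw_errors: int          # value in cgminer's "HW" column for this GPU
    crashed: bool = False   # True if the GPU or the whole system hung


def stop_reason(prev: Reading, curr: Reading, min_gain_khs: float = 5.0) -> Optional[str]:
    """Return why `curr` went too high, or None if the step looks fine."""
    if curr.crashed:
        return "crash or hang"
    if curr.hw_errors > 0:
        return "hardware errors"
    if curr.khs - prev.khs < min_gain_khs:
        return "little or no hashrate gain"
    return None


def sweet_spot(readings: List[Reading], min_gain_khs: float = 5.0) -> Reading:
    """Walk the steps in the order taken; keep the last one before a stop condition."""
    best = readings[0]
    for prev, curr in zip(readings, readings[1:]):
        if stop_reason(prev, curr, min_gain_khs) is not None:
            break  # back off to the previous (stable) setting
        best = curr
    return best
```

For example, with made-up readings at 1000, 1025, 1050 and 1075 MHz core where the last step adds only 2 kH/s, `sweet_spot` returns the 1050 MHz reading, i.e. it drops back one step as described next.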

When any of these things happens, drop the clock speeds back down a bit and try to find the sweet spot where your system is stable and hashrates are highest. When you’ve found settings that you’re happy with, add them to your cgminer startup script (mine_litecoins.sh in Linux, or mine_litecoins.bat in Windows) with these switches:

--gpu-engine [YOUR CORE CLOCK SPEED] --gpu-memclock [YOUR MEMORY CLOCK SPEED]

If you’re using the recommended hardware, then 1050 MHz core / 1250 MHz memory should give you excellent results while still allowing you to undervolt significantly. If you’re using Windows and MSI Afterburner, you should set your Afterburner clock and memory speeds to match your new cgminer settings.

Tweak cgminer settings for performance and noise management

Another easy way to increase hashrates is to modify your cgminer settings. The easiest and most obvious setting to change is the intensity, which controls how much work cgminer hands your GPUs at a time (i.e. how hard it drives them). If you’re using 7950 GPUs, go ahead and increase this to the maximum of 20 (on other GPUs, 20 may result in lower hashrates, or errors). You can do that by simply changing -I 19 in your cgminer startup script to -I 20. Again, monitor cgminer’s output for a while with the new setting in place to make sure that your hashrate moved in the right direction and everything is stable.

If you don’t have the luxury of being able to tuck your mining rig someplace where it is out of earshot, you may have noticed that the fans are quite loud at default settings. To help control the noise, add these settings to your startup script:

--temp-target 80 --auto-fan

This tells cgminer to automatically control all of your GPU fans, with 80C as the target temperature. The fans should run at about half speed or less whenever your GPUs are below the target, which makes them quite a bit less noisy. Using these settings, the recommended hardware, and the plastic crate build I described in part 1 of this guide, my GPUs hover around 78C at fan speeds of 30-40% (the ambient room temperature is kept at 72F). The rig is certainly audible, but it’s a far cry from the jet engine it sounds like when the GPU driver is allowed to manage the fans.
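To make the target-temperature idea concrete, here is a minimal sketch of a fan controller that nudges fan speed toward a target. This is a simplified illustration only, not cgminer’s actual --auto-fan algorithm; the 30% floor, 5% step, and 2C hold band are assumed values.

```python
def next_fan_percent(temp_c: float, fan_pct: float, target_c: float = 80.0,
                     min_pct: float = 30.0, max_pct: float = 100.0,
                     step_pct: float = 5.0, band_c: float = 2.0) -> float:
    """One control step: return the new fan speed (percent) for the current GPU temperature.

    Simplified illustration; cgminer's real --auto-fan logic differs.
    """
    if temp_c > target_c:
        fan_pct += step_pct          # above target: spin up
    elif temp_c < target_c - band_c:
        fan_pct -= step_pct          # comfortably below target: slow down (quieter)
    # inside the band just below target: hold speed to avoid constant changes
    return max(min_pct, min(max_pct, fan_pct))
```

With a 80C target and fans at 40%, a reading of 82C steps the fan up to 45%, 79C holds it at 40%, and 70C drops it to 35% (never below the 30% floor). The hold band is a design choice to keep the fan from hunting up and down around the target.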

Set up a failover / backup mining pool

Nothing will kill your mining performance faster than an unavailable pool. It doesn’t matter what your potential hashrate is if your miner isn’t constantly being fed work to do!

I highly recommend signing up at a second mining pool so that you can use it as an automatic failover whenever your primary pool isn’t reachable. I’m currently using give-me-ltc as my primary pool, and Coinotron as my backup, and that combination has resulted in no gaps in my mining time in over two weeks. Whatever you do, just stay away from notroll.in and do your research on the pool(s) that you choose!

To tell cgminer about your backup pool, simply add this to your startup script, at the end (substitute the pool URL/port and your credentials!):

--failover-only -o stratum+tcp://backup-pool.com:3333 -u user -p password

Now you’re covered if your primary pool goes down for whatever reason. Whenever it comes back up, cgminer will switch back to it automatically.

You can actually add as many backup pools as you want by simply listing more pools after the --failover-only switch; cgminer will use them in the order that they’re listed.
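Using pools “in the order that they’re listed” amounts to always taking the first pool in the list that is currently reachable. Here is a minimal sketch of that rule, assuming a reachability check you supply; the `first_reachable_pool` name and the example URLs are hypothetical, and this is not cgminer’s source. Because the list is re-checked from the top each time, the primary is chosen again as soon as it comes back, matching the switch-back behaviour of cgminer.

```python
from typing import Callable, List, Optional


def first_reachable_pool(pools: List[str], is_up: Callable[[str], bool]) -> Optional[str]:
    """Return the first pool URL (in priority order) that is reachable, or None if all are down."""
    for url in pools:          # index 0 is the primary; the rest are backups in listed order
        if is_up(url):
            return url
    return None                # every pool is down: the rig would sit idle
```

With the primary down, `first_reachable_pool(pools, lambda u: u != pools[0])` returns the backup; once the primary answers again, the same call returns the primary.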

My cgminer startup settings

If you’re using the same hardware as me, and just want to copy & paste my cgminer settings (remember to substitute your pools & login credentials, though!), here they are:

cgminer --scrypt -I 20 -g 1 -w 256 --thread-concurrency 24000 --gpu-engine 1050 --gpu-memclock 1250 --gpu-vddc 1.087 --temp-target 80 --auto-fan -o stratum+tcp://stratum.give-me-ltc.com:3333 -u [YOUR USERNAME] -p [YOUR PASSWORD] --failover-only -o stratum+tcp://coinotron.com:3334 -u [YOUR USERNAME] -p [YOUR PASSWORD]

The “--gpu-vddc 1.087” switch tells cgminer to attempt to undervolt all GPUs to 1.087 V (1087 mV), but whether it actually works depends on your card’s BIOS and driver. In Windows, MSI Afterburner gives you a much better chance of successfully controlling GPU voltage; unfortunately, in Linux you’re probably out of luck if cgminer can’t do it (update 10/2013: undervolting is now possible in Linux as well).
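If you maintain several rigs, it can help to generate the startup line rather than hand-edit it. Below is a minimal Python sketch that assembles the same switches as my command above, with backup pools appended after --failover-only in priority order. The `cgminer_args` function and its defaults are my own illustration (the defaults mirror my settings for the recommended 7950 hardware), and the credentials are placeholders. `shlex.join` quotes for a POSIX shell, so this suits the Linux .sh script rather than a Windows .bat file.

```python
import shlex
from typing import List, Tuple

Pool = Tuple[str, str, str]  # (url, username, password)


def cgminer_args(engine_mhz: int, memclock_mhz: int, vddc: float, pools: List[Pool],
                 intensity: int = 20, thread_concurrency: int = 24000,
                 temp_target: int = 80) -> List[str]:
    """Build a cgminer argument list; pools[0] is the primary, the rest are failovers."""
    args = ["cgminer", "--scrypt", "-I", str(intensity), "-g", "1", "-w", "256",
            "--thread-concurrency", str(thread_concurrency),
            "--gpu-engine", str(engine_mhz), "--gpu-memclock", str(memclock_mhz),
            "--gpu-vddc", f"{vddc:.3f}",
            "--temp-target", str(temp_target), "--auto-fan"]
    primary, *backups = pools
    args += ["-o", primary[0], "-u", primary[1], "-p", primary[2]]
    if backups:
        args.append("--failover-only")
        for url, user, password in backups:   # listed order = failover priority
            args += ["-o", url, "-u", user, "-p", password]
    return args


if __name__ == "__main__":
    pools = [("stratum+tcp://stratum.give-me-ltc.com:3333", "USERNAME", "PASSWORD"),
             ("stratum+tcp://coinotron.com:3334", "USERNAME", "PASSWORD")]
    # Print a shell-quoted line you could paste into mine_litecoins.sh
    print(shlex.join(cgminer_args(1050, 1250, 1.087, pools)))
```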

With these settings and the hardware I recommended, you should get at least 1940 kH/sec in cgminer. Power consumption should be around 720 watts in Windows (after undervolting), and slightly over 800 watts in Linux. Individual results will vary a little bit, but those are realistic expectations.
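To compare these figures with your own rig, it helps to reduce them to efficiency (kH/s per watt) and daily energy use. A minimal sketch of that arithmetic, plugging in the numbers above; the function names are just illustrative.

```python
def khs_per_watt(khs: float, watts: float) -> float:
    """Hashing efficiency: kH/s delivered per watt drawn at the wall."""
    return khs / watts


def kwh_per_day(watts: float) -> float:
    """Energy used by a rig running 24 hours a day, in kWh."""
    return watts * 24 / 1000


for label, watts in [("Windows, undervolted", 720), ("Linux", 800)]:
    print(f"{label}: {khs_per_watt(1940, watts):.2f} kH/s per W, "
          f"{kwh_per_day(watts):.2f} kWh/day")
```

At 1940 kH/sec and 720 W, that works out to roughly 2.7 kH/s per watt and about 17.3 kWh per day.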

Some cards (including the MSI that I recommend) can definitely be pushed more than these clock settings, especially at stock voltage settings (you may also want to check my FAQ for settings for some other popular mining cards). Whether or not the extra heat/noise/power consumption makes the corresponding hashrate increase worthwhile is up to you to determine. Which brings us to:

Analyzing the cost/benefit of different setups

Perhaps you’ve found that you can push your particular 7950 to 1150 MHz core / 1575 MHz memory clock speeds, provided you leave the voltage at stock settings. This nets you an additional 30 kH/sec of hashing speed at a cost of ~50 additional watts. How can you decide whether or not that is a good trade-off?

There are a few handy online calculators that are built to answer exactly these kinds of questions. Here is the best one that I’ve come across. Simply plug in your information, and the calculator will tell you how much daily (or weekly, or monthly, etc) profit you can expect. Change the values to other configurations that you’re considering and watch the profit number go up or down.
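The core of what such a calculator does for the 1150/1575 MHz question above is simple arithmetic: extra revenue from the added hashrate minus the cost of the added power. Here is a minimal sketch, assuming you take the revenue per kH/s per day from a profitability calculator and know your electricity price; both numbers in the example are placeholders, not real market data.

```python
def extra_profit_per_day(extra_khs: float, extra_watts: float,
                         revenue_per_khs_day: float, price_per_kwh: float) -> float:
    """Daily profit change from an overclock: added revenue minus added electricity cost."""
    extra_revenue = extra_khs * revenue_per_khs_day
    extra_cost = extra_watts * 24 / 1000 * price_per_kwh   # W -> kWh per day -> money
    return extra_revenue - extra_cost


def break_even_price(extra_khs: float, extra_watts: float, revenue_per_khs_day: float) -> float:
    """Electricity price per kWh at which the overclock stops paying for itself."""
    return extra_khs * revenue_per_khs_day / (extra_watts * 24 / 1000)


# +30 kH/s for +50 W, as in the example above.
# Both rates below are hypothetical: substitute your calculator's figure and your tariff.
REVENUE_PER_KHS_DAY = 0.01   # currency units per kH/s per day (assumed)
PRICE_PER_KWH = 0.12         # currency units per kWh (assumed)
print(extra_profit_per_day(30, 50, REVENUE_PER_KHS_DAY, PRICE_PER_KWH))
print(break_even_price(30, 50, REVENUE_PER_KHS_DAY))
```

With those placeholder inputs, the extra 50 W costs 1.2 kWh per day, the overclock nets about 0.16 per day, and it stops paying off above about 0.25 per kWh.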

In the next and final installment of the litecoin mining guide, I’ll post a mining FAQ and some other tidbits that didn’t fit anywhere else. Until then, thanks for reading, and feel free to leave questions or comments!

April 22nd, 2013, CryptoBadger

What are all these options like “auto-fan” and “gpu-engine”? When I use them with cgminer I get told that no such options exist. Are these features Windows only? Or am I just compiling cgminer with the wrong options?

The best way to answer your questions is to have you go to this website and read it first:

http://www.webopedia.com/TERM/G/GPU_clock.html

Then it would be good to know your hardware setup and if you followed the guide from beginning to end before you tried to do mining.

Sorry for the naivety of this…

Will this rig allow mining of other cryptocurrencies, or is it task-specific to litecoin?

thanks

This rig will allow you to mine any scrypt-based coin. Pick your favorite: http://coinchoose.com/litecoin.php

Hey guys, I’m new to this. I tried every possible setting in cgminer 3.7.2, and the most I can get is about 645 kH/s. Some other people have posted 750 kH/s.

*****This is my setup.****

64GB SSD

GIGABYTE GA-990FXA-UD5 AM3+

CORSAIR XMS3 8GB (2 x 4GB)

4 x 100363L Radeon R9 280X cards

AMD Sempron 145 Sargas 2.8GHz

Thermaltake Toughpower Grand TPG-1200M 1200W

**** cgminer setup ****

setx GPU_MAX_ALLOC_PERCENT 100

setx GPU_USE_SYNC_OBJECTS 1

cgminer.exe --scrypt -o stratum+tcp://stratum-us.doge.hashfaster.com:3339 -u xxxxx -p xxxxx -I 19 -g 1 -w 256 --thread-concurrency 24000 --shaders 2048 --auto-fan --gpu-fan 30-75 --temp-cutoff 90 --temp-overheat 85 --temp-target 72 --expiry 1 --scan-time 1 --queue 0 --no-submit-stale

*** Driver ***

AMD-APP-SDK-V2.7

Driver Version: 13.251.131206a

Catalyst 13.12

I use these settings with my 1.2V-locked MSI R9 280X cards. This gives me about 680 kH/s and 70-85C temperatures (better cooling is still incoming).

One warning, though: this uses about 1200-1250 watts together with my other components, so it might overload your PSU. (From tests, it seems my other components (mobo, HDD, CPU, fans) use about 175-250 watts.)

Just add one card at a time and see what it does to your power usage and if it’s safe to use these settings.

--gpu-fan 85 --temp-cutoff 90 -I 13 --gpu-engine 1020 --gpu-memclock 1500 -g 2 -w 256 --thread-concurrency 8192

Let me know if it works for you 🙂
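The “add one card at a time” advice can be turned into a quick power-budget check. A minimal sketch, assuming you have measured the base system and one loaded card with a power meter; the 80% continuous-load ceiling is a common rule of thumb assumed here, not a figure from this thread, and the per-card wattage in the example is hypothetical.

```python
import math


def cards_that_fit(psu_watts: float, base_watts: float, per_card_watts: float,
                   max_load_fraction: float = 0.8) -> int:
    """How many identical cards fit under the chosen fraction of the PSU rating."""
    if per_card_watts <= 0:
        raise ValueError("per_card_watts must be positive")
    headroom = psu_watts * max_load_fraction - base_watts   # watts left for GPUs
    return max(0, math.floor(headroom / per_card_watts))
```

For example, on a 1200 W PSU with 250 W for the rest of the system and a hypothetical 250 W per card, this allows 2 cards at an 80% ceiling, or 3 if you run the PSU right up to its rating.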

-I 13 -g with those cards for sure. Lower temps and higher hashrates, win/win. You can start undervolting too once you find your optimal settings. I’m running 280Xs at about 1.075 V each and getting 760 kH/s on average, stable, with temps in the low 70s.

Expiry and scan-time vs. block target times while solo mining. HELP

I’ve been seeing a lot of conflicting info on --expiry and --scan-time while solo mining with cgminer. I’d like to start off by saying that I’ve successfully been solo mining, using the --failover-only flag to pull stratum work from a pool and then using it to solo mine to my wallet. However, --scan-time and --expiry still elude my understanding, in part due to conflicting info on the web. Take, for example, FASTCOIN, with a block target of 12 seconds, and FEATHERCOIN, with a block target of 2.5 minutes. Many of the tutorials set --scan-time 1 --expiry 1 no matter what the block target is. This seems counterintuitive to me, as it could dump work on a block before it has been solved by other miners or myself. I set my --expiry on Fastcoin to 12 seconds, the same as its block target, and to 150 seconds on Feathercoin.

My question is: while solo mining, how do I set scan-time and expiry so that I don’t dump work I could possibly solve (with --expiry 1), but also don’t keep working on blocks that might already have been solved (with --expiry 12 or 150)? Or does cgminer automatically detect new blocks if scan-time is set to 1 second? This still confuses me.

I’m having a problem described here: https://litecointalk.org/index.php?topic=15412.new. Can anybody help? Best!

Hi, I wanted to see how much I could earn mining with an R9 290X, for example (hashrate of 750-800 kH/s; I pay 0.68 ct per kWh).

But on this page:

http://dustcoin.com/mining

I don’t know what to enter in the scrypt field.

Any help?

There is also a field for power next to the scrypt one. What should I enter there?

Thanks

Forget it. I found it out.

Guys, if I let my 3 Titans run full time for 1 month, I’d have a bill of $800 😮!!!!!!

With my four Sapphire 7950 Dual-X cards, I’m getting about 562 kH/s on three of the GPUs and 529 on the fourth one. I tried increasing E and M, but not a darn thing changed. I also tried increasing and lowering the intensity, but it had no effect at all. Temps are between 61 and 71C, so these cards can be pushed further.

Running Xubuntu 12.04.4 and cgminer 3.7.2.

I only went as far as the OC’ing guide here, and since I couldn’t get any increase in hashrate, I think it’s pointless to go further with this guide until I get over this hurdle. I’m going to try going back to Xubuntu 12.10 again and see what happens.

Some time ago AMD introduced a boost mechanism for their cards (following NVIDIA, which did it first with the GTX 680): the clock is automatically adjusted according to the balance between the need for processing power and the card’s temperature. Cards with boost give worse results in mining, so you really have to keep them at low temperatures for the best results. I have 3 Sapphire 7950s like you, and I’m getting 600 kH/s per card (core 1100 MHz and mem 1575), but it requires keeping them under 65C. To do this I set the fans to max (100%). Try this and see what happens.

Another important thing: don’t look only at hashrate. Look at the overall Work Utility, the “WU” figure in the upper right corner of the cgminer screen. That’s the most important indicator, as it tells you how much work (difficulty-1 shares per minute) your rig is actually completing. Higher intensity and thread concurrency give a better hashrate but also a higher rejection rate (you can see it on the pool information screen). I get the best overall results with -I 18.
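To see why a higher raw hashrate can still lose, it helps to discount it by the rejection rate. A minimal sketch, assuming you read accepted and rejected share counts off cgminer’s pool information screen; `effective_khs` is a hypothetical helper, and the numbers in the comparison are made up for illustration.

```python
def effective_khs(khs: float, accepted: int, rejected: int) -> float:
    """Hashrate scaled by the fraction of submitted shares that were accepted."""
    submitted = accepted + rejected
    if submitted == 0:
        return 0.0   # no shares yet, so there is nothing to judge
    return khs * accepted / submitted


# Hypothetical comparison of two intensity settings: (kH/s, accepted, rejected)
settings = {"-I 20": (630.0, 920, 80), "-I 18": (600.0, 980, 20)}
for name, (khs, acc, rej) in settings.items():
    print(name, round(effective_khs(khs, acc, rej), 1))
```

With these made-up counts, the lower intensity comes out ahead (about 588 vs 580 effective kH/s) despite its lower raw hashrate.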

sowa007,

Thanks for the observation about 7950 cards. I am running two rigs with those cards. My temps vary from 62 to 72C, sometimes up to 76C, with voltages set at 1.094 V on one rig and 1.131 V on the second rig (I can’t get this one to accept a lower vddc). My WU averages between 520 and 580 per card. My fans are set to “auto”, with max temp at 75C.

My question is: If I set my fans to 100% wouldn’t that shorten the life of the fans and possibly damage the cards when they are running 24/7?

Hi Edwardf,

Running fans at 100% will surely reduce their lifespan; it’s difficult to say by how much. A lot depends on the kind of bearings (ball, sleeve, fluid). Running them at a stable rate has an advantage as well: they don’t like sudden shifts in rotation speed, so if you set a temp target and it is suddenly exceeded, the fan goes to full speed to compensate, which is not good for the bearings. However, as a trade-off you surely get a longer lifespan for the card: if you run it 24/7, like most people do for mining, the difference between 75C and 65C will surely have some impact. Fans and coolers are cheap, and replacing them is not a big problem (much smaller than replacing the processing unit or RAM on the card).

Maybe the most important thing: in 2-3 years’ time even the best current GPU will be obsolete, especially for mining, because it will be outperformed by mid-range cards. Just about 3 years ago NVIDIA’s top single-GPU card was the GTX 480. Here is how it compares to the current high-end 780 Ti: http://www.hwcompare.com/16416/geforce-gtx-480-vs-geforce-gtx-780-ti/. The resale price won’t be much either. It makes little difference whether a card is useless because its fan is broken or because it’s just too slow. The 7950 won’t be useful for more than 2 more years, or less if ASIC devices come to scrypt coins as well 🙁

So you have to weigh what kind of advantage, if any, you will get by reducing the temperature with the fans at 100%, how old your card is, and how long you think you’ll use it, and judge for yourself (there’s noise as well, but that’s not my problem because my rig is not in my flat).

I just checked my rig: memory at 1450, not 1575.

AngusW,

I have two Sapphire 7950s and a PowerColor. I ran into the same type of issues: two cards are at 600-630, and the other card is around 570. Nothing I do helps. All cards are running at 1020; going to 1050 actually causes them to get lower hashrates. The average for this system is right around 1800 kH/s, so I cannot complain, but I thought I would share that you are not alone!

I used to have two R9 280X cards running at an intensity of 20 and a thread concurrency of 24000. Then I reformatted Windows, and when everything was set up again and I wanted to get it running, it told me there was not enough memory: it won’t let me set TC over 8192, and intensities over 13 give me HW errors. Any ideas why this is happening?

Some help optimizing the engine and memory clocks: a brute-force mapping of the memclock/engine clock parameter space.

7950 (Sapphire)

http://imgur.com/sqOglp5

280X (ASUS)

http://imgur.com/PG7Q1aB