In the second installment of our DIY litecoin mining guide, we’ll look at how to install and configure Linux to properly mine with your GPUs at optimal settings. Don’t be dissuaded if you’ve never used Linux before—our step-by-step guide makes it easy.

Linux has a few advantages over Windows, including the ability to install to a USB stick (which means you don’t need a hard drive), lower hardware requirements (you can get by just fine with less than 4GB of RAM), and simpler remote administration. Best of all, Linux is free! However, be aware that if you’d like to undervolt your GPUs to save power, Windows might be a better choice for you (update 10/2013: no longer true!).

If you missed the hardware portion of our guide, make sure to check it out first. Otherwise, read on.

Build a Litecoin Mining Rig, part 2: Linux Setup

So you’ve decided to use Linux to run your mining rig: a fine choice! If you’re still a bit nervous because you’re a complete Linux newbie, don’t be. Simply follow the step-by-step instructions exactly as they’re written, and you’ll be fine. Even if you’ve never done anything like this before, you should be up and running in about an hour.

Step 1: Configure BIOS settings

Before we even get to Linux, make sure your mining computer’s BIOS settings are in order. Power on your computer, and press the “delete” key a few times immediately after it powers on. You should end up in the BIOS configuration area. Do the following, then save and exit:

- Change power options so that the computer automatically turns itself on whenever power is restored. The reason for this is two-fold: first, it’ll make sure that your miner automatically starts up after a power outage. Second, it makes powering the computer on much easier if you don’t happen to have a power switch connected to the motherboard.

- Make sure that your USB stick is first in the boot-up order (you may need to have a USB stick attached).

- Disable all components that you don’t plan to use. This will save a little bit of power, and since your miner will likely be running 24/7, it’ll add up. For me, that meant disabling onboard audio, the SATA controller, the USB 3.0 ports (I only had a USB 2.0 stick), the FireWire port, and the serial port.

Step 2: Install Xubuntu Desktop to your USB stick

Xubuntu is a lightweight version of Ubuntu, a popular Linux distribution. Most other distros should work just fine, but be aware that the GPU drivers require the presence of Xorg, which means server distros that don’t have a GUI will not work properly.

- Download the Xubuntu Desktop x64 v12.10 installation image.

- You’ll need to either burn the installation image onto a CD, or write the image to a second USB stick. If you use a CD, you’ll need to temporarily hook up a CD-ROM drive to your mining rig for the installation (make sure you temporarily enable your SATA controller if you disabled it in step 1!).

- Once you have the installation media prepared, you’re ready to install Xubuntu to the blank USB stick on your miner. Make sure your blank USB stick is inserted into a USB port on your mining rig, and then boot from your installation media. The Xubuntu installer should appear.

- Make sure to click the “auto-login” box towards the end of the installer; otherwise take all of the default installation options.

- If you’re using a USB stick that is exactly 8GB, the installer may complain about the default partition sizes being too small. Create a new partition table with these settings: 5500 MB for root (/), 315 MB for swap, and the remaining space for home (/home). If you’re using a USB stick larger than 8GB, you will not have to do this.

- When the installation is complete, you should automatically boot into the Xubuntu desktop. Make sure to remove your installation media.

Step 3: Install AMD Catalyst drivers

Open a terminal session by mousing over the bottom center of the screen so that a list of icons appears. Click on “terminal” (it looks like a black box).

- Type the following commands (press “enter” at the end of each line and wait for Linux to finish doing its thing):

    sudo apt-get install fglrx-updates fglrx-amdcccle-updates fglrx-updates-dev
    sudo aticonfig --lsa
    sudo aticonfig --adapter=all --initial
    sudo reboot

- After your computer reboots, you can verify that everything worked by typing:

    sudo aticonfig --adapter=all --odgt

- If you see all of your GPUs listed, each with a temperature reading next to it, you’re good to go.

Important: you may need to have something plugged into each GPU to prevent the OS from idling it. You can plug 3 monitors into your 3 GPUs, but that isn’t very practical. The easiest option is to create 3 dummy plugs, and leave them attached to your GPUs. They’ll “trick” the OS into believing that a monitor is attached, which will prevent the hardware from being idled. Check out how to create your own dummy plugs.

If you ever add GPUs to or remove GPUs from your rig later, you’ll need to re-run this command, then reboot:

    sudo aticonfig --adapter=all --initial
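If you are checking several GPUs over SSH, it can help to let the shell count the results for you. The sketch below is a hypothetical helper, `summarize_odgt` (not part of the AMD tools), that reads the output of `sudo aticonfig --adapter=all --odgt` and reports how many adapters returned a temperature and how many failed. The phrases it matches (“Sensor N: Temperature” and “Get temperature failed”) are assumptions based on the sample output posted in the comments, so adjust them if your driver prints something different.

```shell
#!/bin/bash
# Hypothetical helper (not part of fglrx/aticonfig): summarize the output of
#   sudo aticonfig --adapter=all --odgt
# The matched phrases are assumptions taken from sample output.
summarize_odgt() {
    local text ok failed
    text=$(cat)    # read everything piped in on stdin
    # grep -c prints 0 (and exits 1) when nothing matches; "|| true" keeps
    # that from aborting scripts that run with "set -e".
    ok=$(grep -c 'Sensor [0-9]*: Temperature' <<< "$text" || true)
    failed=$(grep -c 'Get temperature failed' <<< "$text" || true)
    echo "readings=$ok failed=$failed"
    # Succeed only if no adapter failed to report a temperature.
    [ "$failed" -eq 0 ]
}
```

Usage: `sudo aticonfig --adapter=all --odgt 2>&1 | summarize_odgt` (stderr is merged in case the error lines are written there). A non-zero `failed` count is the situation where re-running `--initial` and rebooting is worth trying.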

Step 4: Install SSH, Curl, and package updates

- Install SSH by typing:

sudo apt-get install openssh-server byobu

With SSH installed, you can unplug the keyboard/mouse/monitor (put dummy plugs into all GPUs, though) from your miner, and complete the rest of the installation from your desktop computer. Simply download Putty onto your desktop, run it, and enter the IP address of your mining rig. That should bring up a remote terminal session to your miner, which is more or less just like sitting at the keyboard in front of it.

If you plan to manage your mining rig remotely over the internet, you’ll need to forward port 22 on your router to your miner. Make sure that you use a strong Xubuntu password!

Setup should be quicker from this point, as you can now copy text from this webpage (highlight it and press control-C) and paste it into your Putty session by right-clicking anywhere inside the Putty window. Neat, eh?

- Install Curl and package updates by typing (or copying & pasting into Putty) the following commands:

    sudo apt-get update
    sudo apt-get install curl
    sudo apt-get upgrade

Step 5: Install cgminer

Cgminer is the mining software we’ll be using. If the first command doesn’t work, you’ll need to check out the cgminer website and make a note of the current release version. Substitute that in the commands below.

Update: Cgminer 3.7.2 is the last version to support scrypt—do not use any version after that! In addition, depending on which version you choose to use, you may receive an error complaining about libudev.so.1 when you try to run cgminer—you can find the fix for that here.

- Type the following:

    wget http://ck.kolivas.org/apps/cgminer/2.11/cgminer-2.11.4-x86_64-built.tar.bz2
    tar jxvf cgminer-2.11.4-x86_64-built.tar.bz2

- If everything was uncompressed successfully, we can delete the downloaded archive; we don’t need it anymore:

    rm cgminer-2.11.4-x86_64-built.tar.bz2

- Now check if cgminer detects all of your GPUs properly:

    cd cgminer-2.11.4-x86_64-built
    export DISPLAY=:0
    export GPU_USE_SYNC_OBJECTS=1
    ./cgminer -n
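Rather than eyeballing the `./cgminer -n` output, you could have the shell compare the detected count with the number of cards you installed. This is a hypothetical sketch (`count_detected_gpus` and `check_gpu_count` are illustrative names): it assumes the summary line looks like the “3 GPU devices max detected” line that appears in the sample output in the comments, and it is meant to be fed cgminer’s output with stderr merged in, in case the log messages go there.

```shell
#!/bin/bash
# count_detected_gpus (hypothetical): read `cgminer -n` output on stdin and
# print N from the "N GPU devices max detected" line, or 0 if it is missing.
count_detected_gpus() {
    local n
    n=$(grep -oE '[0-9]+ GPU devices max detected' | head -n 1 | cut -d' ' -f1 || true)
    echo "${n:-0}"
}

# check_gpu_count EXPECTED (hypothetical): succeed only if cgminer saw
# exactly EXPECTED GPUs; otherwise print a warning to stderr and fail.
check_gpu_count() {
    local expected=$1 found
    found=$(count_detected_gpus)
    if [ "$found" -eq "$expected" ]; then
        echo "OK: cgminer detected $found GPU(s)"
    else
        echo "WARNING: expected $expected GPU(s), cgminer detected $found" >&2
        return 1
    fi
}
```

Usage, from the cgminer directory with the two `export` lines above already set: `./cgminer -n 2>&1 | check_gpu_count 3`.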

Step 6: Create cgminer startup script

We’re almost done—now we just need to create a few simple scripts to control cgminer.

- If you’re still in the cgminer directory from the previous step, first return to your home directory:

    cd ..

- Type the following to create a new file with nano, a Linux text editor:

    sudo nano mine_litecoins.sh

- Type the following into nano (note the places where you need to substitute your own usernames!):

    #!/bin/sh
    export DISPLAY=:0
    export GPU_MAX_ALLOC_PERCENT=100
    export GPU_USE_SYNC_OBJECTS=1
    cd /home/YOUR_XUBUNTU_USERNAME/cgminer-2.11.4-x86_64-built
    ./cgminer --scrypt -I 19 --thread-concurrency 21712 -o stratum+tcp://coinotron.com:3334 -u USERNAME -p PASSWORD

- Save the file and quit nano, then enter the following:

sudo chmod +x mine_litecoins.sh

Note that the cgminer settings we’re using in our mine_litecoins.sh script correspond to a good starting point for Radeon 7950 series GPUs. If you followed our hardware guide, these settings will give you good hashrates. If you’re using another type of GPU, you’ll want to use Google to find optimal cgminer settings for it.

Also note that you’ll need to create an account at one of the litecoin mining pools, and plug your username and password into the script (the -u and -p parameters). I have Coinotron in there as an example, but there are quite a few to choose from.
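Because your username appears in more than one place, you might prefer to generate mine_litecoins.sh from a few values instead of editing it by hand. The sketch below uses a hypothetical helper, `write_mine_script`, that writes the same script shown in this step (same cgminer 2.11.4 directory and 7950 settings) with your values substituted, then marks it executable. It assumes your pool password contains no spaces or shell metacharacters, since the values end up unquoted in the script, just as in the hand-edited version.

```shell
#!/bin/bash
# write_mine_script OUT_FILE XUBUNTU_USER POOL_URL WORKER PASSWORD
# Hypothetical helper: writes the mine_litecoins.sh script from this step
# with your own values filled in, then makes it executable.
write_mine_script() {
    local out=$1 user=$2 pool=$3 worker=$4 pass=$5
    local cgdir="/home/$user/cgminer-2.11.4-x86_64-built"
    # Unquoted EOF: $cgdir, $pool, $worker and $pass are expanded now;
    # nothing else in the body contains a "$".
    cat > "$out" <<EOF
#!/bin/sh
export DISPLAY=:0
export GPU_MAX_ALLOC_PERCENT=100
export GPU_USE_SYNC_OBJECTS=1
cd $cgdir
./cgminer --scrypt -I 19 --thread-concurrency 21712 -o $pool -u $worker -p $pass
EOF
    chmod +x "$out"
}
```

Usage: `write_mine_script ~/mine_litecoins.sh YOUR_XUBUNTU_USERNAME stratum+tcp://coinotron.com:3334 USERNAME PASSWORD`. Run as your regular user, this leaves the script owned by you rather than by root.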

Step 7: Create auto-start scripts

We want cgminer to automatically start mining whenever the rig is powered on. That way, we keep mining losses to a minimum whenever a power outage occurs, and we don’t have to worry about manually starting it back up in other situations.

- Type the following to create a new script and open it in nano:

    sudo nano miner_launcher.sh

- Enter the following text into the editor (substitute your Xubuntu username where shown!):

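    #!/bin/bash
    # Optional first argument: seconds to wait before starting cgminer.
    # No argument (or "none") means no delay.
    DEFAULT_DELAY=0
    if [ "x$1" = "x" -o "x$1" = "xnone" ]; then
        DELAY=$DEFAULT_DELAY
    else
        DELAY=$1
    fi
    sleep $DELAY
    # Run mine_litecoins.sh as your regular user in a detached screen session named "cgm".
    su YOUR_XUBUNTU_USERNAME -c "screen -dmS cgm /home/YOUR_XUBUNTU_USERNAME/mine_litecoins.sh"

- Save and quit nano, and then type: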

    sudo chmod +x miner_launcher.sh

- Now we need to call our new script during startup; we do that by adding it to /etc/rc.local. Type the following to open /etc/rc.local in nano:

    sudo nano /etc/rc.local

- Add the following text, right above the line that reads “exit 0” (substitute your own username!):

/home/YOUR_XUBUNTU_USERNAME/miner_launcher.sh 30 &
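If you would rather not edit /etc/rc.local by hand (or you re-run your setup later and don’t want a duplicate entry), this step can be scripted. The sketch below is a hypothetical helper, `add_before_exit`, not something the guide itself uses: it inserts a line just above the first line that is exactly `exit 0`, does nothing if the line is already present, and appends it at the end if there is no `exit 0` line. It writes back with `cat >` rather than `mv` so the file keeps its owner and executable bit.

```shell
#!/bin/bash
# add_before_exit FILE LINE  (hypothetical helper)
# Insert LINE above the first line that is exactly "exit 0" in FILE,
# or append it if there is no such line. Does nothing if LINE is present.
add_before_exit() {
    local file=$1 line=$2 tmp
    # -x: match whole lines, -F: treat LINE literally (it contains "&").
    if grep -qxF -- "$line" "$file"; then
        return 0
    fi
    tmp=$(mktemp) || return 1
    # Note: awk -v expands backslash escapes, so LINE should not contain "\".
    awk -v add="$line" '
        !done && $0 == "exit 0" { print add; done = 1 }
        { print }
        END { if (!done) print add }
    ' "$file" > "$tmp" || { rm -f "$tmp"; return 1; }
    # Copy back with cat so FILE keeps its owner and permissions.
    cat "$tmp" > "$file"
    rm -f "$tmp"
}
```

Usage, from a root shell (for example after `sudo -i`) with the function defined: `add_before_exit /etc/rc.local '/home/YOUR_XUBUNTU_USERNAME/miner_launcher.sh 30 &'`.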

Step 8: Create an alias to easily check on cgminer

We’re essentially done at this point, but you’ll probably want to SSH into your miner from time to time to check on your GPU temperatures, hashrates, etc. Creating an alias will make that easy.

- Type:

    sudo nano ~/.bashrc

- Scroll toward the end of the file, and add this text above the line that reads “# enable programmable completion…”:

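    alias cgm='screen -x cgm'

- Save and quit out of nano. The alias takes effect in new terminal sessions (or run source ~/.bashrc to load it into the current one).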
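The same edit can be made non-interactively. A minimal sketch, assuming it is acceptable to append the alias at the very end of ~/.bashrc rather than above the programmable-completion block (for a simple alias the position should not matter in an interactive shell): the hypothetical helper `add_cgm_alias` adds the line only if it is not already there, so running it twice is harmless.

```shell
#!/bin/bash
# add_cgm_alias [FILE]  (hypothetical helper; FILE defaults to ~/.bashrc)
# Appends the cgm alias used in this guide unless an identical line exists.
add_cgm_alias() {
    local file=${1:-$HOME/.bashrc}
    local line="alias cgm='screen -x cgm'"
    touch "$file"
    if ! grep -qxF -- "$line" "$file"; then
        # Make sure the file ends with a newline before appending, so the
        # alias does not get glued onto the last existing line.
        if [ -s "$file" ] && [ -n "$(tail -c 1 "$file")" ]; then
            echo >> "$file"
        fi
        printf '%s\n' "$line" >> "$file"
    fi
}
```

Usage: `add_cgm_alias` (edits your own ~/.bashrc, no sudo needed), then `source ~/.bashrc`.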

That’s it—you’re done! You’ll probably want to test everything now. The easiest way to do that is to close your Putty session and power down your miner. Turn it back on and the following should happen:

- Your miner should boot into Xubuntu. This may take about a minute, depending on the speed of your USB stick.

- 30 seconds after Xubuntu has loaded, cgminer will automatically start and begin mining. You’ll probably notice the fans on your GPUs spin up when this happens.

- You should be able to SSH into your miner at any time and type cgm to bring up the cgminer screen. To close the screen (and leave cgminer running), type control-A, then control-D.

- If you ever need to start cgminer manually (because you quit out of it, killed it, etc.), simply type ./miner_launcher.sh from your home directory.
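Before launching cgminer by hand, it is worth checking whether the autostarted copy is still running, so you don’t start a second one. A minimal sketch with a hypothetical helper, `has_cgm_session`: it reads the output of `screen -ls` and succeeds if a session named `cgm` (the name miner_launcher.sh passes to `screen -dmS`) is listed. It assumes screen’s usual listing format, with each session on its own indented line as PID.name, and that you run `screen -ls` as the same user the launcher passes to `su`.

```shell
#!/bin/bash
# has_cgm_session  (hypothetical helper)
# Reads `screen -ls` output on stdin; succeeds if a session named "cgm"
# is listed (lines look like "<tab>12345.cgm<tab>(Detached)").
has_cgm_session() {
    grep -qE '^[[:space:]]+[0-9]+\.cgm[[:space:]]'
}
```

Usage: `if screen -ls | has_cgm_session; then echo "already running, type cgm"; else ./miner_launcher.sh; fi`.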

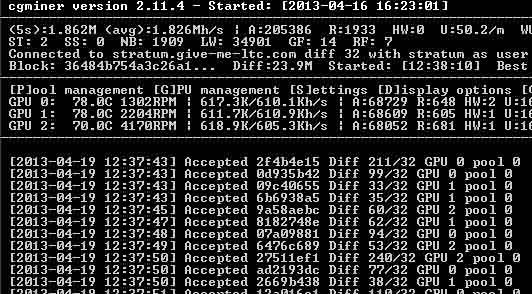

If all went well, you should see something like this when checking cgminer via your “cgm” alias.

Congratulations! You have your own headless Linux litecoin miner!

The next section of our guide covers setup under Windows, and then we take a look at optimizing cgminer settings for better performance.

April 19th, 2013

CryptoBadger

Hi!

I have a problem when I download cgminer or the wemineltc.com CPU miner.

A warning pops up saying they contain a trojan.

How should I solve the problem?

Many antivirus programs flag the cgminer .zip file as malicious because it is sometimes installed without a user’s knowledge, so that a third party can surreptitiously mine on the user’s computer (and pocket the gains). Cgminer by itself certainly isn’t malicious.

Just make sure that you download cgminer from ckolivas’s site, and you should be fine.

Can anyone help then?

Thanks for the tutorial. Could you help me with how to drive the display from Intel integrated graphics while mining with a Radeon 7950?

You’ll just need to tell cgminer not to mine with your Intel IG, which you can do via the --device option. For example, assuming that you have your integrated graphics adapter plus three other dedicated GPUs, the following will tell cgminer to use devices 0, 1, and 3 (device 2 will not be used):

    cgminer --device 0,1,3

You’ll just need to determine which number corresponds to the integrated device in your system, and then omit that number from the --device argument.

Followed this tutorial. The only thing I might point out is that on Xubuntu 12.04 amd64 (Precise) you have to run:

    sudo apt-get install screen

That was the only thing hanging me up, for some weird reason.

I’ve had a 5 GPU rig running stable for about 3 weeks.

Last night it started behaving weirdly…

Running the cgm alias I only saw two out of the five cards and the temperature and fan information were missing.

I was able to restore it to working order, but the real issue is that I have no idea what happened.

Could anyone give me some pointers on how to start troubleshooting issues?

Thanks!!

Are you still having problems? Maybe it has something to do with load balancing on the PSU?

https://forum.litecoin.net/index.php/topic,5193.msg38709.html#msg38709

I am considering building a 5-GPU, Ubuntu-based rig, but it seems tricky. Did you have to do anything special to get it working? I know about the 1600 W LEPA PSU, the powered 1x-to-16x risers, and all that.

However, I read stuff like this:

https://bitcointalk.org/index.php?topic=257670.0

and I start having second thoughts. Granted, this guy is running Windows, but at the end of the thread it seems like it is the ASRock 970 Extreme4 that may be having the issues regardless of operating system. There is another guy commenting on this blog, 4gun, who has his doubts as well…

http://www.cryptobadger.com/2013/04/build-a-litecoin-mining-rig-hardware/comment-page-4/#comment-1890

Is this just a Windows problem these people are having? I don’t want to spend extra on a PSU intended for 5 GPUs if I am only going to be able to run 3 or 4. Any hints? Frodo, can you confirm that you have indeed got 5 GPUs running on the aforementioned ASRock mobo? I have only read online that it is “possible” to run 5 GPUs on the ASRock 970, but found no real instances of someone actually doing it, so your input would be greatly valued. CB, you said you know lots of people who run 5 GPUs… any input you have would be great as well.

I am itchy to pull the trigger on these hardware components, but I don’t want to be riding on a train in vain. In light of what I am reading, I am now leaning towards just 4 GPUs per rig with a lower-wattage PSU, but in the end it would be much more cost-effective to go with 5 if it seems doable. Maybe with a different mobo? I am going to keep researching, but I am hoping someone a little more seasoned can chime in here. I swear litebeers all around if we can get some discussion going.

I haven’t heard of anyone having any issues with 5 GPUs on the ASRock 970, as long as they stick to Linux. Four GPUs is the limit in Windows, however.

I can’t say that I have my own 5 GPU rig (I prefer 3-4 GPU rigs for heat/power reasons), but I have had comments from people that have successfully built linux-based rigs with five 7950 GPUs on the ASRock 970. You should be ok as long as you get a beefy PSU (The Lepa 1600w will be fine), use at least two powered risers, and use a large crate (and possibly a box fan).

I’m running solidly using the suggested hardware and 5 GPUs. It’s a beauty generating 3.15MH/sec.

My problem was that I was running this through a Kill A Watt to see the energy usage.

As the Kill A Watt was failing, it caused weird behavior. It’s rated for 15 amps max, which is very close to what you will be drawing. It was reading around 1500 watts in operation…

So make sure you have a circuit that can handle 1500 watts sustained. I’ve been lucky with my 15-amp circuit, but I’m not sure I’d recommend this. I’ll be getting more juice shortly.
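To put numbers on this: current is power divided by voltage. A minimal sketch, assuming 120 V North American mains and the commonly cited guideline that a continuous load should stay at or below 80% of the circuit’s breaker rating (check your local electrical code; the helper names `amps`, `continuous_limit`, and `fits_continuous` are illustrative only).

```shell
#!/bin/bash
# amps WATTS VOLTS: current drawn by a load, I = P / V, to two decimals.
amps() {
    awk -v p="$1" -v v="$2" 'BEGIN { printf "%.2f\n", p / v }'
}

# continuous_limit BREAKER_AMPS: 80% of the breaker rating, the usual
# guideline for loads that run for hours at a time (an assumption here).
continuous_limit() {
    awk -v a="$1" 'BEGIN { printf "%.2f\n", a * 0.8 }'
}

# fits_continuous WATTS VOLTS BREAKER_AMPS: succeed if the load stays
# within that 80% guideline.
fits_continuous() {
    awk -v p="$1" -v v="$2" -v a="$3" 'BEGIN { exit !(p / v <= a * 0.8) }'
}
```

With these assumptions, a 1500 W draw works out to 12.5 A, already above the 12 A guideline for a 15 A circuit but well under the 16 A guideline for a 20 A circuit like the ones mentioned later in this thread.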

It is non-trivial to undervolt under Linux.

Anyway. Except for the kill-a-watt snafu, my 5 card ubuntu rig is solid. No issues at all.

Some advice:

1. Use 6 gallon crates.

2. Don’t use the Rubbermaid railcovers. You are covering a larger span and the cards will be hot, resulting in U-shaped railcovers within hours of running and your cards potentially falling into the crate. I have a thread on this in Part 5. CryptoBadger suggests using 1/2-inch PVC pipes. I’m currently using a piece of wood covered with the railcovers… Want to move to the PVC.

I’ll get an electrician soon, at that point all machines going forward will be identical 5 GPU machines.

There seems to be a limit of 4 GPUs on Windows, which could explain the issues some other guys have. You can undervolt more easily in Windows. I have not done the analysis of what makes sense long term (4 undervolted cards vs. 5).

I hope this helps!

Cheers!!

Thanks guys, really. It is reassuring to know that it can be done! It’s going to be a trip and a half for me though! I am really trying to bone up before I jump in.

I was planning on using a Kill A Watt… uhh, guess I need to rethink that… but I’m definitely going with a larger crate and upgraded support as per your recommendation.

I have good power lines to use, but they are at commercial rates. It is too bad that undervolting is such a drag in Linux. Do either of you guys know offhand if current-market MSI 7950 Twin Frozrs have a dual BIOS switch? Again, this is a situation where I am finding slim and contradictory info searching online… even on the MSI website. Either way I am probably just gonna go for it with the 5 GPUs…. ah cha cha cha.

Oh, I forgot to ask. Are you running your rig through any sort of surge protection, Frodo?

Does the circuit breaker count??? 😉

This is a discussion I’ll have with the electrician once I get him in…

It seems that all consumer-grade surge protectors and Kill A Watt meters are only rated to 15 A. The only solutions I have come up with for a 5-GPU rig are putting an appropriately rated clamp meter on the line to check usage, and installing inline surge protection. I have also found expensive rack-mount surge protector strips with higher thresholds. I am lucky that the lines I am using are 20 A (so overloading the circuits is not a concern), but I will still have a problem with melted and burnt-out Kill A Watts and surge strips.

I would love to hear about that discussion with the electrician! I hope it goes well.

I’m not an electrician, but I was curious to see what I could find and I came across this 20a surge protector. It looks like you’ll need an adapter plug (the plug on the unit is the 20a type), but it might suit your needs.

Yes, the current MSI TF3 cards do have a dual BIOS switch. It’s a tiny white switch on the top side of the card – you’ll have to look for it since the documentation doesn’t seem to mention it, but it is there.

Ended up with a new 100-amp subpanel with surge protection built into the panel. A total of six 20-amp circuits.

Got 3 rigs running 5 MSI Frozr 7950 at the moment.

Life is good!

Nice! Glad to hear it. My parts are on the way… the fun will begin shortly!

Any idea why this is not working?

kevin@miner:~$ sudo aticonfig --adapter=all --odgt

[sudo] password for kevin:

Adapter 0 – AMD Radeon HD 7900 Series

Sensor 0: Temperature – 78.00 C

ERROR – Get temperature failed for Adapter 1 – AMD Radeon HD 7900 Series

ERROR – Get temperature failed for Adapter 2 – AMD Radeon HD 7900 Series

I’m getting the same thing you are, Kevin. I just built my rig with the same motherboard and processor linked in this guide, and I also have three Sapphire HD 7950s. I typed the same command as you after rebooting and got the same temperature error. Anyone else have any ideas? The guide is much appreciated!

Just in case you don’t see my reply to Kevin:

Try typing:

    sudo aticonfig --adapter=all --initial

Then reboot, and hopefully things work properly after your rig restarts.

I reinstalled my OS from a clean disc and that solved my hardware issue. All my cards now show up fine when I run the cgminer checks. The only issue I am having now is with the scripts I created in nano. All the commands work, and I’ve copied and pasted them exactly. I’ve even saved them in the right place: the mining and launcher scripts are in the home folder, and I’ve added the alias to the root folder (.bashrc). But no matter what, when I reboot or run ./miner_launcher.sh, nothing happens. I double-checked that I entered my username correctly, and I can’t think of anything else. Any help would be much appreciated!

TL;DR

I copied and pasted all the scripts to launch and monitor cgminer and put them in the right place. For some reason, none of the scripts will launch?

cameron@cameron-desktop:~/Desktop/cgminer-2.11.4-x86_64-built$ ./cgminer -n

[2013-08-27 00:43:21] CL Platform 0 vendor: Advanced Micro Devices, Inc.

[2013-08-27 00:43:21] CL Platform 0 name: AMD Accelerated Parallel Processing

[2013-08-27 00:43:21] CL Platform 0 version: OpenCL 1.2 AMD-APP (1016.4)

[2013-08-27 00:43:21] Platform 0 devices: 3

[2013-08-27 00:43:21] 0 Tahiti

[2013-08-27 00:43:21] 1 Tahiti

[2013-08-27 00:43:21] 2 Tahiti

[2013-08-27 00:43:21] GPU 0 AMD Radeon HD 7900 Series hardware monitoring enabled

[2013-08-27 00:43:21] GPU 1 AMD Radeon HD 7900 Series hardware monitoring enabled

[2013-08-27 00:43:21] GPU 2 AMD Radeon HD 7900 Series hardware monitoring enabled

[2013-08-27 00:43:21] 3 GPU devices max detected

[2013-08-27 00:43:21] USB all: found 14 devices – listing known devices

here is what happens when I try to launch

cameron@cameron-desktop:~/Desktop$ cd ..

cameron@cameron-desktop:~$ cd ..

cameron@cameron-desktop:/home$ ./miner_launcher.sh

Password:

cameron@cameron-desktop:/home$

I give the password and it just goes back to home like nothing happened?

I’m a bit new at this but I’ve been getting over most of the humps, all your help is greatly appreciated! Thanks again!

Just got cgminer to run by going to its directory and typing ./cgminer

Even though my computer recognises 3 GPUs, cgminer only shows one… so I guess we shall start the troubleshooting from here (and the post above).

Let me know your thoughts, CryptoBadger. I have three Sapphire HD 7950s and the rest of the hardware is identical to the guide.

cameron@cameron-desktop:~/Desktop$ cd cgminer-2.11.4-x86_64-built

cameron@cameron-desktop:~/Desktop/cgminer-2.11.4-x86_64-built$ ./cgminer

[2013-08-27 01:05:49]

Summary of runtime statistics:

[2013-08-27 01:05:49] Started at [2013-08-27 01:00:19]

[2013-08-27 01:05:49] Pool: stratum+tcp://pool.litebonk.com:3333

[2013-08-27 01:05:49] Runtime: 0 hrs : 5 mins : 28 secs

[2013-08-27 01:05:49] Average hashrate: 415.5 Megahash/s

[2013-08-27 01:05:49] Solved blocks: 1

[2013-08-27 01:05:49] Best share difficulty: 5.59K

[2013-08-27 01:05:49] Share submissions: 1

[2013-08-27 01:05:49] Accepted shares: 0

[2013-08-27 01:05:49] Rejected shares: 1

[2013-08-27 01:05:49] Accepted difficulty shares: 0

[2013-08-27 01:05:49] Rejected difficulty shares: 63

[2013-08-27 01:05:49] Reject ratio: 100.0%

[2013-08-27 01:05:49] Hardware errors: 0

[2013-08-27 01:05:49] Utility (accepted shares / min): 0.00/min

[2013-08-27 01:05:49] Work Utility (diff1 shares solved / min): 4.97/min

[2013-08-27 01:05:49] Stale submissions discarded due to new blocks: 0

[2013-08-27 01:05:49] Unable to get work from server occasions: 0

[2013-08-27 01:05:49] Work items generated locally: 48

[2013-08-27 01:05:49] Submitting work remotely delay occasions: 0

[2013-08-27 01:05:49] New blocks detected on network: 2

[2013-08-27 01:05:49] Summary of per device statistics:

[2013-08-27 01:05:49] GPU0 | (5s):0.000 (avg):415.5Mh/s | A:0 R:1 HW:0 U:0.0/m I: 6

Shutdown signal received.

cameron@cameron-desktop:~/Desktop/cgminer-2.11.4-x86_64-built$

You may have already resolved this, but if not, try entering this command:

    sudo aticonfig --adapter=all --initial

Then reboot, and try cgminer again. Hopefully all of your GPUs will show up properly.

As far as your previous comment about miner_launcher.sh not doing anything: you won’t actually see anything happen on the command line. It basically starts cgminer up in a screen session so that you can monitor it easily by typing “cgm”. If you can type “cgm” (without the quotes) after running your launcher script and see cgminer’s output, then everything is working properly. If not, try copying and pasting your launcher script contents here and I’ll see if I can help.

I am using 3 MSI cards. They all look OK with -n, but when I start cgminer, the third is disabled?

kevin@miner:~/cgminer$ ./cgminer -n

[2013-08-25 20:54:53] CL Platform 0 vendor: Advanced Micro Devices, Inc.

[2013-08-25 20:54:53] CL Platform 0 name: AMD Accelerated Parallel Processing

[2013-08-25 20:54:53] CL Platform 0 version: OpenCL 1.2 AMD-APP (1016.4)

[2013-08-25 20:54:53] Platform 0 devices: 3

[2013-08-25 20:54:53] 0 Tahiti

[2013-08-25 20:54:53] 1 Tahiti

[2013-08-25 20:54:53] 2 Tahiti

[2013-08-25 20:54:53] GPU 0 AMD Radeon HD 7900 Series hardware monitoring enabled

[2013-08-25 20:54:53] GPU 1 AMD Radeon HD 7900 Series hardware monitoring enabled

[2013-08-25 20:54:53] GPU 2 AMD Radeon HD 7900 Series hardware monitoring enabled

[2013-08-25 20:54:53] 3 GPU devices max detected

[2013-08-25 20:54:53] USB all: found 13 devices – listing known devices

[2013-08-25 20:54:53] No known USB devices

cgminer version 2.11.4 – Started: [2013-08-25 20:52:40]

——————————————————————————–

(5s):618.7K (avg):589.8Kh/s | A:19 R:0 HW:0 U:18.3/m WU:709.7/m

ST: 2 SS: 0 NB: 1 LW: 9 GF: 0 RF: 0

Connected to stratum.give-me-coins.com diff 64 with stratum as user kevintmckay.1

Block: c491a0f8e7d05dde… Diff:64.1M Started: [20:52:40] Best share: 902

——————————————————————————–

[P]ool management [G]PU management [S]ettings [D]isplay options [Q]uit

GPU 0: 64.0C 1411RPM | 410.3K/303.3Kh/s | A:11 R:0 HW:0 U: 10.61/m I:20

GPU 1: 33.0C 853RPM | 301.0K/320.2Kh/s | A: 8 R:0 HW:0 U: 7.71/m I:20

GPU 2: 31.0C 949RPM | OFF / 0.000h/s | A: 0 R:0 HW:0 U: 0.00/m I:20

——————————————————————————–

[2013-08-25 20:52:37] Started cgminer 2.11.4

[2013-08-25 20:52:39] Probing for an alive pool

[2013-08-25 20:52:42] Error -5: Enqueueing kernel onto command queue. (clEnqueueNDRangeKernel)

[2013-08-25 20:52:42] GPU 2 failure, disabling!

[2013-08-25 20:52:42] Thread 2 being disabled

[2013-08-25 20:52:42] Accepted d06c48e3 Diff 18/16 GPU 1

[2013-08-25 20:52:44] Accepted c737aa3d Diff 18/16 GPU 0

[2013-08-25 20:52:44] Accepted 65eb94a2 Diff 33/16 GPU 0

[2013-08-25 20:52:44] Accepted a7d12442 Diff 49/16 GPU 0

[2013-08-25 20:52:45] Accepted fb639471 Diff 19/16 GPU 1

[2013-08-25 20:52:49] Accepted 2b156636 Diff 29/16 GPU 1

[2013-08-25 20:52:54] Accepted 99a8439c Diff 17/16 GPU 0

[2013-08-25 20:52:56] Accepted 7e8335c7 Diff 41/16 GPU 0

[2013-08-25 20:52:57] Accepted a8fb7109 Diff 33/16 GPU 1

[2013-08-25 20:53:04] Accepted 536817bb Diff 16/16 GPU 0

[2013-08-25 20:53:06] Accepted 8afc76e0 Diff 64/64 GPU 1

[2013-08-25 20:53:11] Accepted 01a618c2 Diff 376/64 GPU 1

[2013-08-25 20:53:29] Accepted da8c73a1 Diff 120/64 GPU 0

[2013-08-25 20:53:31] Accepted 2d0cfb77 Diff 419/64 GPU 1

[2013-08-25 20:53:32] Accepted e3b5199e Diff 159/64 GPU 0

[2013-08-25 20:53:36] Accepted 960f3231 Diff 902/64 GPU 0

[2013-08-25 20:53:36] Accepted 2ff9196d Diff 139/64 GPU 0

[2013-08-25 20:53:39] Accepted 6e541666 Diff 81/64 GPU 0

[2013-08-25 20:53:41] Accepted 56c50fa1 Diff 93/64 GPU 1

Try typing:

    sudo aticonfig --adapter=all --initial

Then reboot, and hopefully things work properly after your rig restarts.

Hello and thanks for the tutorial :) I have a question about autostart.

I can’t understand this part:

    DEFAULT_DELAY=0
    if [ "x$1" = "x" -o "x$1" = "xnone" ]; then
        DELAY=$DEFAULT_DELAY
    else
        DELAY=$1
    fi
    sleep $DELAY

It would be nice if you could provide some comments for this.

I have to add to this one. This script works fine. However, the terminal does not open on startup but rather in the background somewhere. Is this the same for everyone else?

Is it possible to have it show? I sometimes like to play with the settings while it is running. Like the previous poster, I am a little baffled by these commands.

The autostart scripts will start cgminer in a saved screen session so that you can easily monitor it later. After your rig fully starts up, you should be able to type:

    cgm

at any time to see cgminer’s output. Type control-A, then control-D when you’re finished monitoring cgminer to leave the screen session intact so you can get back to it later.

Basically this is just a bit of code that tells the script to delay starting cgminer by a variable number of seconds. So if you simply type “./miner_launcher.sh” to start your launcher script, cgminer will start immediately, since you didn’t pass a delay value in. If you instead type “./miner_launcher.sh 30”, the launcher script will pause for 30 seconds before invoking cgminer. In my guide, I recommend passing in a value of 30 in your autostart script: this gives the Linux OS time to fully start all services before cgminer starts, and also gives you a chance to abort its launch if you need to for some reason.
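Here is the same logic pulled out into a function so you can try it without actually sleeping. `resolve_delay` is a hypothetical name used only for this illustration: it prints the delay that miner_launcher.sh would pass to `sleep`. The “x” prefix is an old shell idiom that keeps `[` from misreading an empty argument or one that starts with a dash. A `case` statement, shown as a second version, does the same job and avoids `-o`, which POSIX marks as obsolescent.

```shell
#!/bin/bash
DEFAULT_DELAY=0

# Same test as miner_launcher.sh: no argument or "none" means the default,
# anything else is used as the number of seconds to sleep.
resolve_delay() {
    if [ "x$1" = "x" -o "x$1" = "xnone" ]; then
        echo "$DEFAULT_DELAY"
    else
        echo "$1"
    fi
}

# Equivalent version using case instead of the "x" prefix and -o.
resolve_delay_case() {
    case "$1" in
        ""|none) echo "$DEFAULT_DELAY" ;;
        *)       echo "$1" ;;
    esac
}
```

For example, `resolve_delay 30` prints 30, while `resolve_delay` and `resolve_delay none` both print 0, which is why the rc.local entry passes 30 and a manual `./miner_launcher.sh` starts cgminer right away.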

Woooo hooo, got my 3 MSI 7950s down to 0.962 V!!!! 754 W at the wall.

Now hashing at 1.96 MH/s.

Hi Kevin!

Do you have any information on how you achieved this?

This is the missing piece!!! I have a 5-GPU rig that would save approx. 250 watts.

Cheers!!!

OK, everything has been stable at 0.962 V for 48 hours now. Below is my config. I think it was mostly a matter of reducing the GPU engine and memory clocks. I’m currently getting over 600 kH/s from each card; I will slowly ratchet up the GPU engine clock until failure, then back off. Heat and noise are way down at 0.962 V: three 7950s are pulling 740 W at the wall.

    {
        "auto-fan" : true,
        "auto-gpu" : true,
        "temp-cutoff" : "84",
        "temp-overheat" : "81",
        "temp-target" : "77",
        "gpu-fan" : "0-100",
        "intensity" : "20",
        "vectors" : "1",
        "worksize" : "256",
        "kernel" : "scrypt",
        "lookup-gap" : "2",
        "thread-concurrency" : "40960",
        "shaders" : "0",
        "api-port" : "4028",
        "expiry" : "120",
        "gpu-dyninterval" : "7",
        "gpu-platform" : "0",
        "gpu-threads" : "1",
        "gpu-engine" : "1070",
        "gpu-memclock" : "1250",
        "gpu-powertune" : "0",
        "log" : "5",
        "no-pool-disable" : true,
        "queue" : "1",
        "scan-time" : "60",
        "scrypt" : true,
        "shares" : "0",
        "kernel-path" : "/usr/local/bin"
    }

Hi Frodo,

Well, it worked well for 12 hours but then became unstable. I am trying some different stuff and will let you know. Here is the guide I used; the undervolting worked with the MSIs, but like I said, it became unstable.

http://coinrigs.com.au/blogs/news/8170111-custom-bios-for-msi-twinfrozr-7950-oc-sapphire-7950-vaporx

Here is another article that looks promising:

http://pastebin.com/eBaxQHwa

I wonder if CryptoBadger has ROMs he could share with us.

Best of luck

Thanks for posting! I know a lot of people on here are very interested in undervolting… including myself. I hope it goes well!

Just wanted to chime in here. I’ve actually tried these BIOSes (the MSI versions, anyway), and can confirm that they do work properly. 0.962v is a little lower than I’d prefer, and you may have issues getting your GPUs to run stable at much more than 600 kH/sec or so. Still, the power savings are quite significant, and if you’re not comfortable making your own custom BIOS then these are a good choice if you’d like to reduce power consumption under linux.

Thanks for an amazing collection of guides, CryptoBadger! I would probably be nowhere without them.

Been kicking around the idea of flashing my GPU BIOS with the low voltage one from coinrigs.com. Before taking the plunge, however, I ran some tests using TriXX (on Windows).

Running at 1000/1250 MHz (gpu/mem) I couldn’t get under 1.0v though. I was getting about 585kh/s on each of my Sapphire 7950 VaporX cards.

Have to say I’m pretty amazed at their claims of 1100/1400 .962v and 640kh/s

So here’s my question: is .962v an absolute value, or just a baseline that gets dynamically adjusted by the card/driver… perhaps not very transparently?

I need to reconfirm this, but I recall another undervolting test of mine where GPUZ failed to reflect any lowering of VDDC regardless of what I did in TriXX.

Found some interesting info here:

http://www.xtremesystems.org/forums/showthread.php?286778-How-To-vBIOS-edit-your-HD7950-Vapor-X

The author seems to feel that Vapor-X vBIOS was programmed by monkeys on crack.

Aha! I figured out what was happening: it seems that if you pass gpu-vddc to cgminer and the Catalyst driver rejects it, your voltage reverts back to the default value, not the one you set with TriXX. A pity, though, because I liked having it in my bat file as a reference for my own benefit.

Thanks for this guide. I have set up all the hardware with all of your recommendations. Linux, however, is stalling my mining rig. I keep getting an error that says “./cgminer: error while loading shared libraries: libudev.so.1: cannot open shared object file: No such file or directory”. Also, when I type “sudo nano .bashrc”, all I get is a blank nano file with no text. Please help me, as this is very frustrating.

Which version of Linux are you running? It sounds like you may be running 13.04 – some libraries were removed in the 13.x release that are necessary for the mining setup that I describe in my guide. There are workarounds available, but you’ll likely get the best (and easiest) results by using Xubuntu 12.10 or 12.04.

I’m running 12.10, which I got from your ISO file. The only difference is my version of cgminer, which is 3.4.1. How much would it cost, and how long would it take, to just order a flash drive from you?

I’m running Xubuntu 13.04 and cgminer 3.4.3 without any hassles. I didn’t have to do anything special; I just followed this guide. Maybe something went wrong with your install… Be sure to leave some elbow room in the / filesystem. It was touch-and-go getting it to fit on a 4GB flash disk.

I haven’t really thought about offering pre-configured flash drives. You’d need to have the exact same hardware as me (eg: everything I recommend in my guide) – if you do and you’re still interested, drop me a line and we can discuss.

Same deal here as you, Alex. I’ve got the same rig, 12.10 and cgminer 3.4.3. It happens when I check the GPUs with the

./cgminer -n

command. Any thoughts, or have you gotten anywhere with that?

Reinstalled the OS and upgraded to Xubuntu 13. That seemed to work.

Others seem to have had success by typing the following (though I did not). I kept getting an error when trying to install libudev1, so I reinstalled 12 (did not work) and then installed 13 (worked).

sudo apt-get install libudev1
cd /lib/x86_64-linux-gnu/
sudo ln -s libudev.so.0 libudev.so.1
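Before creating symlinks by hand, it may help to confirm exactly which shared libraries cgminer cannot find. `ldd` prints `=> not found` next to each unresolved library, so a tiny filter over its output lists them. This is a minimal sketch: the function name `missing_libs` is hypothetical, and the library path in the comments assumes a 64-bit Ubuntu install like the ones discussed here.

```sh
#!/bin/sh
# Hypothetical helper: read `ldd` output on stdin and print the name of
# every shared library the dynamic linker could not resolve.
missing_libs() {
    # ldd marks unresolved libraries as "libname => not found"
    awk '/=> not found/ { print $1 }'
}

# Usage on the rig, from the cgminer directory:
#   ldd ./cgminer | missing_libs
# Then see which libudev versions are actually installed (64-bit path assumed):
#   ls -l /lib/x86_64-linux-gnu/libudev.so*
```

If `libudev.so.1` is printed, that matches the libudev error reported earlier in this thread; an empty result means the binary's libraries all resolve.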

Thanks for the tip. For now I just bought one from CryptoBadger. Next time I’ll give it a try.

I can confirm that cgminer 3.3.4 works with xubuntu 12.10. I initially tried cgminer 3.5 (the day it came out) and had similar problems.

Good news for under-volters:

VBE7 – vBIOS Editor for Radeon HD 7000 series cards (excl 7790)

http://www.techpowerup.com/forums/showthread.php?t=189089

This is a Windows executable, right? Would it run under Wine?

It’s Windows, yeah. I don’t know if it will run in an emulator… Surely you can beg, borrow or steal 5 minutes of computer time on a Windows PC somewhere 😉 Just have a saved copy of your vBIOS handy, load it up in the program, make a quick edit and save. Done. Then flash as normal (DOS boot and atiflash).

Thanks for the link! This looks really nice – I plan to test at some point soon. If everything works properly I’ll probably feature it in a short write-up. Much better than editing ROMs with a hex editor!

Hi CryptoBadger!

Any updates on the VBE7 yet?

I’m drooling at the possibility of saving 50W per GPU. (I’ve got 3 rigs and 15 GPUs, which should be around 750W saved!!!)

Thanks!
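As a rough check of that estimate, assuming the 50W saving holds for every card and the rigs run 24/7:

```latex
\begin{aligned}
15 \times 50\,\mathrm{W} &= 750\,\mathrm{W} \\
750\,\mathrm{W} \times 24\,\mathrm{h/day} &= 18\,\mathrm{kWh/day}
\end{aligned}
```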

Been pretty busy and haven’t had a chance to give it a try yet. Judging from the feedback & comments, it seems like most people are reporting a positive experience with it. If you’ve got dual BIOS, I wouldn’t hesitate to give it a try – just make a copy of your primary BIOS, edit it, and then flip the switch on your GPU to the secondary BIOS position and upload the modified BIOS. If it doesn’t work, just flip the switch back and everything should be back to normal.

Mostly got everything up and running but am running into problems mining.

I am getting two alternating errors. I get the first error and then upon retry, about 30 seconds later, the second error.

[timestamp] No servers were found that could be used to get work from.
[timestamp] Please check the details from the list below of the servers you have input
[timestamp] Most likely you have input the wrong URL, forgotten to add a port, or have not set up workers
[timestamp] Pool: 0 URL: stratum+tcp://coinotron.com:3334 User: username Password: password
[timestamp] Press any key to exit, or cgminer will try again in 15s.

followed by:

[timestamp] Most likely you have input the wrong URL, forgotten to add a port, or have not set up workers
[timestamp] Pool: 0 URL: stratum+tcp://coinotron.com:3334 User: user Password: pass
[timestamp] Press any key to exit, or cgminer will try again in 15s.
[timestamp] Pool 0 difficulty changed to 256
[timestamp] pool 0 JSON stratum auth failed: [
   25,
   "Not subscribed"

I have the right website and port (I believe) and the right usernames and passwords. I know that with coinotron, you write the username as username.worker, and I am doing that.

I have also noticed a discrepancy with the way you have written the startup parameters for mining and how it is described on coinotron’s website. Could this have something to do with it?

./cgminer --scrypt -I 19 --thread-concurrency 21712 -o stratum+tcp://coinotron.com:3334 -u username -p password

vs. coinotron’s website’s help page:

Stratum LTC pool:

cgminer -o stratum+tcp://coinotron.com:3334 -u workername -p password --scrypt

I’m also wondering which payment method to choose (PPS, PPLNS, or RBPS) and what to do with the payout threshold on coinotron’s site (when I get there).

Thanks
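For a single pool, the two command lines above should be equivalent: the position of `--scrypt` relative to `-o`/`-u`/`-p` should not matter, and the `-u` value is where coinotron’s `username.worker` login goes. Below is a minimal launcher sketch; the URL, worker name and password are placeholders, and both the `launch_cgminer` function and the `DRY_RUN` switch (which prints the command instead of running it) are hypothetical additions, not part of cgminer.

```sh
#!/bin/sh
# Placeholders: substitute your own pool URL, worker login and password.
POOL_URL="stratum+tcp://coinotron.com:3334"
POOL_USER="username.worker"   # coinotron-style login: account.worker
POOL_PASS="password"

# Hypothetical wrapper around the cgminer command line used in this thread.
launch_cgminer() {
    # -o, -u and -p together describe one pool; the other flags
    # (--scrypt, -I, --thread-concurrency) configure mining on the GPUs.
    set -- --scrypt -I 19 --thread-concurrency 21712 \
        -o "$POOL_URL" -u "$POOL_USER" -p "$POOL_PASS"
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo ./cgminer "$@"    # show the command without starting cgminer
    else
        exec ./cgminer "$@"    # replace this shell with cgminer
    fi
}
```

Running `DRY_RUN=1 launch_cgminer` first lets you eyeball the exact login being sent before calling `launch_cgminer` for real.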

Sometimes a sudo reboot will do wonders.

Indeed. =) Are you up and running now?

Up and at em! Thanks!

Hi again, Badger. I want to use PuTTY to remotely control my rig from my Mac Pro. Any suggestions? :]

I have been using Terminal to SSH into my rig from my Mac. It’s not “remote control” per se, but it’s basically the same thing as using the terminal on the rig itself.

In Terminal:

ssh username@ipaddress

replacing username with the rig’s username and ipaddress with the rig’s IP address.

I have tried setting up my own miner with this guide, but after 2 attempts with clean OS installs, it still will not work. I am following the guide exactly and using coinotron.

When I restart the computer, it does not automatically run cgminer.

When I type ./miner_launcher.sh into the command window, it does not run cgminer.

I am quite frustrated and am grateful for any help that can be given to point me in the right direction.

I am using the hardware in this guide with 3x 7950.

I had trouble getting cgminer to work as well. In the end, it was a problem with the version. I ended up using Xubuntu 12.10 with cgminer 3.3.4. Any cgminer version newer than that was looking for a library that wasn’t there in Xubuntu 12.10. If you post which versions of everything you are using, maybe someone can help.

Help!

I am really struggling here to get cgminer working properly with 5 GPUs.

I can get one card hashing decently….. (gets close to 600kh/s)

cgminer version 3.3.4 - Started: [2013-10-02 07:00:10]
--------------------------------------------------------------------------------
(5s):373.7K (avg):587.6Kh/s | A:96 R:0 HW:0 WU:448.3/m
ST: 2 SS: 0 NB: 1 LW: 7 GF: 0 RF: 0
Connected to ftc.give-me-coins.com diff 16 with stratum as user brows.1
Block: bde70e254e583f69… Diff:13.7M Started: [07:00:10] Best share: 48
--------------------------------------------------------------------------------
[P]ool management [G]PU management [S]ettings [D]isplay options [Q]uit
GPU 0: 53.0C 4086RPM | OFF / 0.000h/s | A: 0 R:0 HW:0 WU: 0.0/m I:20
GPU 1: 28.0C 4036RPM | 342.5K/685.6Kh/s | A:160 R:0 HW:0 WU:655.7/m I:20
GPU 2: 26.0C 4010RPM | OFF / 0.000h/s | A: 0 R:0 HW:0 WU: 0.0/m I:20
GPU 3: 32.0C 3830RPM | OFF / 0.000h/s | A: 0 R:0 HW:0 WU: 0.0/m I:20
GPU 4: 28.0C 3876RPM | OFF / 0.000h/s | A: 0 R:0 HW:0 WU: 0.0/m I:20
--------------------------------------------------------------------------------
[2013-10-02 07:00:07] Started cgminer 3.3.4
[2013-10-02 07:00:07] Loaded configuration file /home/mami/.cgminer/cgminer.conf
[2013-10-02 07:00:08] Probing for an alive pool
[2013-10-02 07:00:09] Pool 0 difficulty changed to 16
[2013-10-02 07:00:10] Network diff set to 13.7M
[2013-10-02 07:00:10] Error -5: Enqueueing kernel onto command queue. (clEnqueueNDRangeKernel)
[2013-10-02 07:00:10] GPU 3 failure, disabling!
[2013-10-02 07:00:10] Thread 3 being disabled
[2013-10-02 07:00:10] Error -5: Enqueueing kernel onto command queue. (clEnqueueNDRangeKernel)
[2013-10-02 07:00:10] GPU 2 failure, disabling!
[2013-10-02 07:00:10] Thread 2 being disabled
[2013-10-02 07:00:10] Error -5: Enqueueing kernel onto command queue. (clEnqueueNDRangeKernel)
[2013-10-02 07:00:10] GPU 0 failure, disabling!
[2013-10-02 07:00:10] Thread 0 being disabled
[2013-10-02 07:00:10] Error -5: Enqueueing kernel onto command queue. (clEnqueueNDRangeKernel)
[2013-10-02 07:00:10] GPU 4 failure, disabling!
[2013-10-02 07:00:10] Thread 4 being disabled
[2013-10-02 07:00:14] Accepted 9f0a8c03 Diff 36/16 GPU 1
[2013-10-02 07:00:14] Accepted dc307770 Diff 34/16 GPU 1
[2013-10-02 07:00:14] Accepted cdd23ebe Diff 30/16 GPU 1
[2013-10-02 07:00:15] Accepted 7f160d02 Diff 25/16 GPU 1
[2013-10-02 07:00:17] Accepted 9b60771c Diff 47/16 GPU 1

If I run

./cgminer -n

they are all listed:

[2013-10-02 01:58:11] 5 GPU devices max detected

I’ve done this many times:

sudo aticonfig --adapter=all -f --initial

My startup script is:

#!/bin/sh
export DISPLAY=:0
export GPU_MAX_ALLOC_PERCENT=100
export GPU_USE_SYNC_OBJECTS=1
cd /home/mami/cgminer
./cgminer -c /home/mami/.cgminer/cgminer.conf

So it should go straight to the conf file, which is:

,"intensity" : "20,20,20,20,20",
"vectors" : "1,1,1,1,1",
"worksize" : "256,256,256,256,256",
"kernel" : "scrypt,scrypt,scrypt,scrypt,scrypt",
"lookup-gap" : "0,0,0,0,0",
"thread-concurrency" : "0,0,0,0,0",
"shaders" : "0,0,0,0,0",
"gpu-engine" : "1050,1050,1050,1050,1050",
"gpu-fan" : "0-85,0-85,0-85,0-85,0-85",
"gpu-memclock" : "1400,1400,1400,1400,1400",
"gpu-memdiff" : "0,0,0,0,0",
"gpu-powertune" : "0,0,0,0,0",
"gpu-vddc" : "0.000,0.000,0.000,0.000,0.000",
"temp-cutoff" : "84,84,84,84,84",
"temp-overheat" : "81,81,81,81,81",
"temp-target" : "77,77,77,77,77",
"api-port" : "4028",
"expiry" : "120",
"gpu-dyninterval" : "7",
"gpu-platform" : "0",
"gpu-threads" : "1",
"hotplug" : "5",
"log" : "5",
"no-pool-disable" : true,
"queue" : "1",
"scan-time" : "60",
"scrypt" : true,
"temp-hysteresis" : "3",
"shares" : "0",
"kernel-path" : "/usr/local/bin"
}

I am aware that the thread-concurrency is 0 across the board, but this is the only configuration where I have gotten a card close to 600kh/s with no hw problems.

If I set the thread-concurrency to 21712 for each GPU, I get TWO cards (#1 and #3) working at roughly 300kh/s with no HW problems.

I can even get ALL the cards running, but very crappily (massive HW errors, not hashing anything at all, and hanging the rig up):

cgminer version 3.3.4 - Started: [2013-10-02 07:48:07]
--------------------------------------------------------------------------------
(5s):133.4K (avg):108.6Kh/s | A:0 R:0 HW:114 WU:101.9/m
ST: 2 SS: 0 NB: 1 LW: 16 GF: 0 RF: 0
Connected to ftc.give-me-coins.com diff 32 with stratum as user brows.1
Block: 450eb3897b582097… Diff:13.7M Started: [07:48:22] Best share: 809
--------------------------------------------------------------------------------
[P]ool management [G]PU management [S]ettings [D]isplay options [Q]uit
GPU 0: 44.0C 4033RPM | 33.35K/21.71Kh/s | A:0 R:0 HW:22 WU: 19.3/m I:20
GPU 1: 28.0C 3968RPM | 33.56K/21.71Kh/s | A:0 R:0 HW:18 WU: 17.4/m I:20
GPU 2: 26.0C 3959RPM | 33.60K/21.71Kh/s | A:0 R:0 HW:35 WU: 26.1/m I:20
GPU 3: 29.0C 3796RPM | 64.74K/32.57Kh/s | A:0 R:0 HW:35 WU: 32.9/m I:20
GPU 4: 27.0C 3818RPM | 3.253K/10.86Kh/s | A:0 R:0 HW: 4 WU: 6.2/m I:20
--------------------------------------------------------------------------------
[2013-10-02 07:48:01] Started cgminer 3.3.4
[2013-10-02 07:48:01] Loaded configuration file /home/mami/.cgminer/cgminer.conf
[2013-10-02 07:48:04] Probing for an alive pool
[2013-10-02 07:48:05] Pool 0 difficulty changed to 16
[2013-10-02 07:48:05] Pool 1 difficulty changed to 16
[2013-10-02 07:48:22] Network diff set to 13.7M
[2013-10-02 07:48:34] Pool 0 difficulty changed to 32

Conf file settings for this:

"intensity" : "20,20,20,20,20",
"vectors" : "1,1,1,1,1",
"worksize" : "256,256,256,256,256",
"kernel" : "scrypt,scrypt,scrypt,scrypt,scrypt",
"lookup-gap" : "0,0,0,0,0",
"thread-concurrency" : "10000,10000,10000,10000,10000",
"shaders" : "0,0,0,0,0",
"gpu-engine" : "1050,1050,1050,1050,1050",
"gpu-fan" : "0-85,0-85,0-85,0-85,0-85",
"gpu-memclock" : "1400,1400,1400,1400,1400",
"gpu-memdiff" : "0,0,0,0,0",
"gpu-powertune" : "0,0,0,0,0",
"gpu-vddc" : "0.000,0.000,0.000,0.000,0.000",
"temp-cutoff" : "84,84,84,84,84",
"temp-overheat" : "81,81,81,81,81",
"temp-target" : "77,77,77,77,77",
"api-port" : "4028",
"expiry" : "120",
"gpu-dyninterval" : "7",
"gpu-platform" : "0",
"gpu-threads" : "1",
"hotplug" : "5",
"log" : "5",
"no-pool-disable" : true,
"queue" : "1",
"scan-time" : "60",
"scrypt" : true,
"temp-hysteresis" : "3",
"shares" : "0",
"kernel-path" : "/usr/local/bin"

Can anyone please help? I feel like there is some basic setting or something I am missing here; the miner seems to be fundamentally flawed. Even a hint would be nice!
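Every per-GPU setting in these conf files repeats one value per card, separated by commas, so all of those lists have to be kept the same length whenever a card is added or removed. A small hypothetical helper like the one below can generate such a list; it is only a sketch that builds the string and does not read or modify cgminer.conf.

```sh
#!/bin/sh
# Hypothetical helper: print VALUE repeated COUNT times, comma-separated,
# in the format cgminer.conf uses for per-GPU settings.
per_gpu() {
    value=$1
    count=$2
    list=$value
    i=1
    while [ "$i" -lt "$count" ]; do
        list="$list,$value"
        i=$((i + 1))
    done
    printf '%s\n' "$list"
}

# Example: print a conf line for five cards.
#   printf '"thread-concurrency" : "%s",\n' "$(per_gpu 21712 5)"
```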

Never mind. I forgot about the dummy plugs! I’m a dummy. I think it is very interesting, though, that I could somehow get all the cards running by turning the thread concurrency way down, which led me to believe that the problem wasn’t the cards getting idled. Anyway, I also took a bunch of the unnecessary stuff out of the conf file, and I have been running stable at 3200kh/s for 12 hours now. Thanks again for the guide, CB! Hang tight for litebeers!

Glad to hear that you got it working! Interesting that it was the dummy plugs. I still haven’t been able to figure out exactly when they’re required – people have reported both needing them and not needing them on configurations that are pretty much identical.

Looks like the dummy plugs are dependent on driver version. From the cgminer gpu readme:

“Q: I have multiple GPUs and although many devices show up, it appears to be working only on one GPU splitting it up.

A: Your driver setup is failing to properly use the accessory GPUs. Your driver may be configured wrong or you have a driver version that needs a dummy plug on all the GPUs that aren’t connected to a monitor.”

I’m having some problems getting my 2nd 7950 running. I had 1 card running great for a month. I added a new one today, and it shows up but shows no hashing. I’m confused as to what to do. I also tried the command sudo aticonfig --adapter=all --initial

After I reboot, I try the command below, and it says: error - X needs to be running to perform AMD Overdrive commands

sudo aticonfig --adapter=all --odgt

Any help would be appreciated.

Tried dummy plugs? That was an issue with my build.

Try adding a -f to the aticonfig command:

sudo aticonfig --adapter=all --initial -f

Then reboot. Sometimes your config settings get messed up, and this will force them to be regenerated if your current config contains invalid info.

I am still getting that error message even after adding the -f.
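One possible cause of the “X needs to be running” message, if you are running aticonfig over SSH rather than on the rig’s own console, is that the `DISPLAY` variable is not set in that session; the startup script posted earlier in this thread exports `DISPLAY=:0` for a similar reason. The sketch below assumes the rig’s X server is on display `:0` and that its process is named `Xorg` or `X`; both function names are hypothetical, and whether you also need sudo may depend on your setup.

```sh
#!/bin/sh
# Use the session's DISPLAY if it is set; otherwise assume the rig's first
# local X display, ":0" (assumption, matching the startup script).
rig_display() {
    printf '%s\n' "${DISPLAY:-:0}"
}

# Hypothetical wrapper: query GPU temperatures through the Catalyst driver.
# aticonfig's Overdrive options (--odgt) need a running X server.
gpu_temps() {
    # The X server process is usually named Xorg (or X on some setups).
    if ! pgrep -x Xorg >/dev/null 2>&1 && ! pgrep -x X >/dev/null 2>&1; then
        echo "No X server found; start X (or reboot) and try again" >&2
        return 1
    fi
    DISPLAY=$(rig_display) aticonfig --adapter=all --odgt
}
```

If `gpu_temps` reports that no X server was found even after a reboot, the problem is more likely the X configuration itself than the SSH session.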

Hi cbadger, I’ve got a problem :] I am trying to build a 7x HD 5970 rig on an MSI Z77A-GD65 motherboard with an Ivy Bridge 1610 Intel processor. After installing Xubuntu, in ‘Step 3: Install AMD Catalyst drivers’, once ‘sudo apt-get install fglrx-updates fglrx-amdcccle-updates fglrx-updates-dev’ is done, I run ‘sudo aticonfig --lsa’ and it says ‘No supported adapters detected’. Then I tried ‘sudo aticonfig --adapter=all --initial’, and it says the same thing. I tried rebooting, which didn’t help. I tried reinstalling Xubuntu, which didn’t help either. The dummy plugs are in. I am trying all of this with just two of the HD 5970s connected at the moment, as the custom frame is not done yet. I have read all of the comments here and in other threads and haven’t found anything yet that helps me sort this issue out. Do you have any idea how to sort it out? Your help would be absolutely appreciated! Thank you in advance! :]

It sounds like the catalyst driver didn’t get installed properly. Did you see any errors at all during the install process?

Nope, no errors whatsoever. If you can help me finally sort this out, I will send you some litecoins for your work, because I am losing hope. I followed your guide and a couple of other guides, and I still get the same message no matter what I try: ‘no supported adapters detected’. I have invested over £1200 and haven’t been able to get it done for the past 3 weeks. I am freaking out now! :]

Hey CryptoBadger – first, thanks so much for this! I built a rig exactly to your specs. But I’ve run into a couple problems that I can’t figure out.

1) Booting. Sometimes it sees the USB drive and boots into Ubuntu fine, but there’s times when it doesn’t see the USB drive at all and just boots into the BIOS. If I plug in a monitor to one of the video cards, I get into the BIOS and can fart around – but I don’t see the USB drive. HOWEVER – if I then save and exit, it boots to the USB drive. This is the ASRock 970 Extreme that you suggested. Any clue why it’s doing this?

2) I have my command line set to the same settings as yours (pointed to a different pool, of course, but the config data is the same). I have the dummy plugs in. But I’m only getting about 550kh/s. Not a huge deal; I’m just trying to figure out what I could have missed. Any guesses? (Yeah, I know, pretty vague, but I’m at a loss.)

3) Is there a way to remote into it while it’s booting to BIOS? Dumb question I’m sure, but it’s such a PITA to plug everything back in every time I restart it (which I’m doing often with all these tweaks). And with it not booting into Ubuntu, it’s really annoying.

4) If I’ve screwed up the BIOS, how do I use that CMOS switch to recover? Do I just hit it and then reboot? Hold it in while rebooting?

Thanks again!

Try putting the USB stick into another USB port. I found that the USB3.0 ports didn’t always work properly, but haven’t had any issues with the USB2.0 ports.

550 Kh/sec is definitely on the low side if you have all of the same hardware that I recommended in my guide. Can you copy & paste your cgminer config/launch settings here?

Unfortunately the only way to manipulate the motherboard BIOS settings that I’m aware of is with a local monitor and keyboard.

The ASRock motherboard has a jumper that you’ll need to short to reset BIOS settings to default. Check the manual for exactly how to do this (should be under “clear CMOS” or something similar), but essentially you’re just moving a jumper over two pins on the motherboard.

The USB port was the cause of the boot issue. It was sporadic on the 3.0 port, but works like a charm on the 2.0 port. Weird.

The command line I use in mine_litecoins.sh is:

cgminer --scrypt -I 19 -g 1 -w 256 --thread-concurrency 21712 --gpu-engine 1050 --gpu-memclock 1400 --gpu-vddc 1.087 --temp-target 80 --auto-fan -o stratum+tcp://[MININGPOOL] -u [USERNAME] -p [PASSWORD]

I tried both the 1050 core / 1400 memory clocks and intensity 20, but when I did, I had a lot of hardware trouble: one card would always report “SICK” while running. I backed all the changes out and just left it at the default settings, and it’s been running fine, albeit slowly.

I wasn’t going to play with the undervolting, as the friend I’m building this for doesn’t have to pay for electricity (jealous!).

Found that CMOS button and got it rebooted, thanks!

Thanks again for all your help!

Hi CryptoBadger, terrific guide, many thanks!

Like a lot of other people here, I have followed your hardware recommendations almost exactly (the only difference on my rig being a Corsair PSU…) and I have a question:

In Step 3 of the guide you wrote:

“After your computer reboots, you can verify that everything worked by typing:

sudo aticonfig --adapter=all --odgt

If you see all of your GPUs listed, with “hardware monitoring enabled” next to each, you’re good to go.”

What do you suggest if I do NOT see “hardware monitoring enabled”?

When I ran ‘sudo aticonfig --adapter=all --odgt’ after rebooting at this step, I was presented with:

“Adapter 0 - AMD Radeon HD 7900 Series
Sensor 0: Temperature - 37.00 C
Adapter 1 - AMD Radeon HD 7900 Series
Sensor 0: Temperature - 33.00 C
Adapter 2 - AMD Radeon HD 7900 Series
Sensor 0: Temperature - 30.00 C”

and nothing else…

I had a nightmare of a time installing Xubuntu (install hangs, USB boot inconsistencies, etc.) that resulted in many hours of frustration, but I’ve persevered and managed to get it running, so I’m really hoping that this is something minor that can be easily fixed!! 🙂

Any help or suggestions at this point would be greatly appreciated.

I noticed in another comment thread that the guide was updated to make it clearer for noobs… perhaps I tripped on the quotation marks used for “hardware monitoring enabled”, expecting to see more specific output 🙂

Anyway, after further perseverance, I have managed to get cgminer up and running….briefly.

It reported a failure on one of my GPUs and then promptly hung:

cgminer version 3.3.4 - Started: [2013-11-10 22:01:40]
--------------------------------------------------------------------------------
(5s):559.1K (avg):575.1Kh/s | A:2816 R:0 HW:0 WU:478.3/m
ST: 2 SS: 0 NB: 2 LW: 65 GF: 0 RF: 0
Connected to coinotron.com diff 256 with stratum as user Bl4z3r.1
Block: 47f40dcdadb654bf… Diff:82.6M Started: [22:10:17] Best share: 2.11K
--------------------------------------------------------------------------------
[P]ool management [G]PU management [S]ettings [D]isplay options [Q]uit
GPU 0: 72.0C 2750RPM | 247.9K/290.0Kh/s | A:2304 R:0 HW:0 WU:236.3/m I:19
GPU 1: 34.0C 1008RPM | 343.3K/286.1Kh/s | A: 512 R:0 HW:0 WU:250.5/m I:19
GPU 2: 31.0C 930RPM | OFF / 0.000h/s | A: 0 R:0 HW:0 WU: 0.0/m I:19
--------------------------------------------------------------------------------
[2013-11-10 22:04:53] Accepted 314bb9ca Diff 348/256 GPU 0
[2013-11-10 22:04:53] Accepted 7ec19c22 Diff 373/256 GPU 0

Given the difference between GPU0 and GPU1, looks like I’ll be needing some dummy plugs. I have a monitor connected to GPU0 at the moment…

It’s been a looong weekend getting this set up, so any tips on why one of my GPUs is failing would be super appreciated.

That’s slightly odd, but I wouldn’t worry about it. Your GPU temperatures are showing up, so hardware monitoring is enabled. I’m not sure why the message is missing.

I seem to be getting consistent failures on my 3rd GPU, but at this point I’m actually just pleased to have got the entire rig built and cgminer running.

Just want to say thanks again to CB for a fantastic guide.

Cheers!

Thanks for this guide – very much appreciated.

As a newcomer to Ubuntu (12.04 LTS, 64-bit) building my first rig, I got stuck at the line:

./cgminer -n

which led to ‘cannot execute binary file’.

I’ve installed the Ubuntu 64-bit version, and I presume that the suggested cgminer-2.11.4-x86_64 is also 64-bit.

I also tried: cgminer-3.7.2-x86_64

same error.

Any help on this would be much appreciated.

I believe that is normally the error that you’d see if the executable that you’re trying to run is compiled for a different architecture than your OS is running (eg: trying to run a 64-bit application on a 32-bit OS).

Are you sure you have 64-bit Ubuntu installed? Type the following at the command prompt:

uname -m

It should say x86_64 if you’ve got a 64-bit install.
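The same check can be extended to the binary itself: `file` reports which architecture an executable was built for, so comparing its output with `uname -m` should expose a 32-bit/64-bit mismatch directly. This is a minimal sketch; the function names are hypothetical, and only the two common x86 cases are handled.

```sh
#!/bin/sh
# Hypothetical helper: succeed if a `file` description ($2) matches the
# machine type printed by `uname -m` ($1). Only x86 cases are handled.
arch_matches() {
    case "$1:$2" in
        x86_64:*x86-64*)      return 0 ;;  # 64-bit OS, 64-bit binary
        i?86:*"Intel 80386"*) return 0 ;;  # 32-bit OS, 32-bit binary
        *)                    return 1 ;;
    esac
}

# Usage on the rig: check_binary ./cgminer
check_binary() {
    info=$(file -L "$1")
    if arch_matches "$(uname -m)" "$info"; then
        echo "OK: $info"
    else
        echo "Mismatch: OS reports $(uname -m), but $info" >&2
        return 1
    fi
}
```

A 64-bit binary on an i686 install, the situation described above, is reported as a mismatch. A 64-bit OS can sometimes run 32-bit binaries when 32-bit libraries are installed, so in that direction treat a mismatch as a hint rather than a verdict.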

Thanks CryptoBadger

You were quite right

It seems I inadvertently installed the 32 bit version of Ubuntu.

Must have got the files mixed up somewhere – ooops!

Got it all going now; moving on to the fine-tuning section.

Thanks.

Great – glad to hear that you got it working!

Hi, everyone. I’m trying to set up my rig with Xubuntu and an HD 7970. I read through the directions, but I can’t complete them yet. I’m at step 5. My problem is that cgminer doesn’t find my GPU. I installed the latest version. Does anyone have a good idea for this problem? I’m really new to mining, so I’m really puzzled.

So, I ordered the hardware to build my first rig, and while waiting for the kits to arrive, I figured I would build a Linux disk with all (or at least as many as possible) of the dependencies configured, so as to avoid spending too much time on the OS setup once the hardware is on hand. So I decided to proceed.

A couple of concerns and questions, though:

1. For mining purpose, can the disk drive be a bootable media with persistence (say 4 Gigs) as opposed to the full blown OS installed media?

2. Would the OS need to know you are running it on the actual (intended) system for it to gather appropriate libraries etc during the install?

[So I noticed the very first package install failed:

sudo apt-get install fglrx-updates fglrx-amdcccle-updates fglrx-updates-dev

however, after running ‘update’ and ‘upgrade’, these did complete. All of it was done on an Intel “home-use” laptop.]

Obviously this is not going to be of any value at this point:

sudo aticonfig --adapter=all --odgt

but, I was expecting this to work:

./miner_launcher.sh

If I don’t sudo, it prompts me for a password.

If I do sudo, it takes me back to the # prompt and does nothing.

Not sure what I’m missing..

Any feedback would be highly appreciated.

Thanks.

Hey CryptoBadger,

Can you help me out? I put the Xubuntu iso file onto a 16GB flash drive but my computer will not boot from it. I have all of the settings in the bios setup to boot from the usb. Is there something else that could be wrong?

I forgot to check the box that says “notify me of a reply”, so I am doing that now.